Introduction

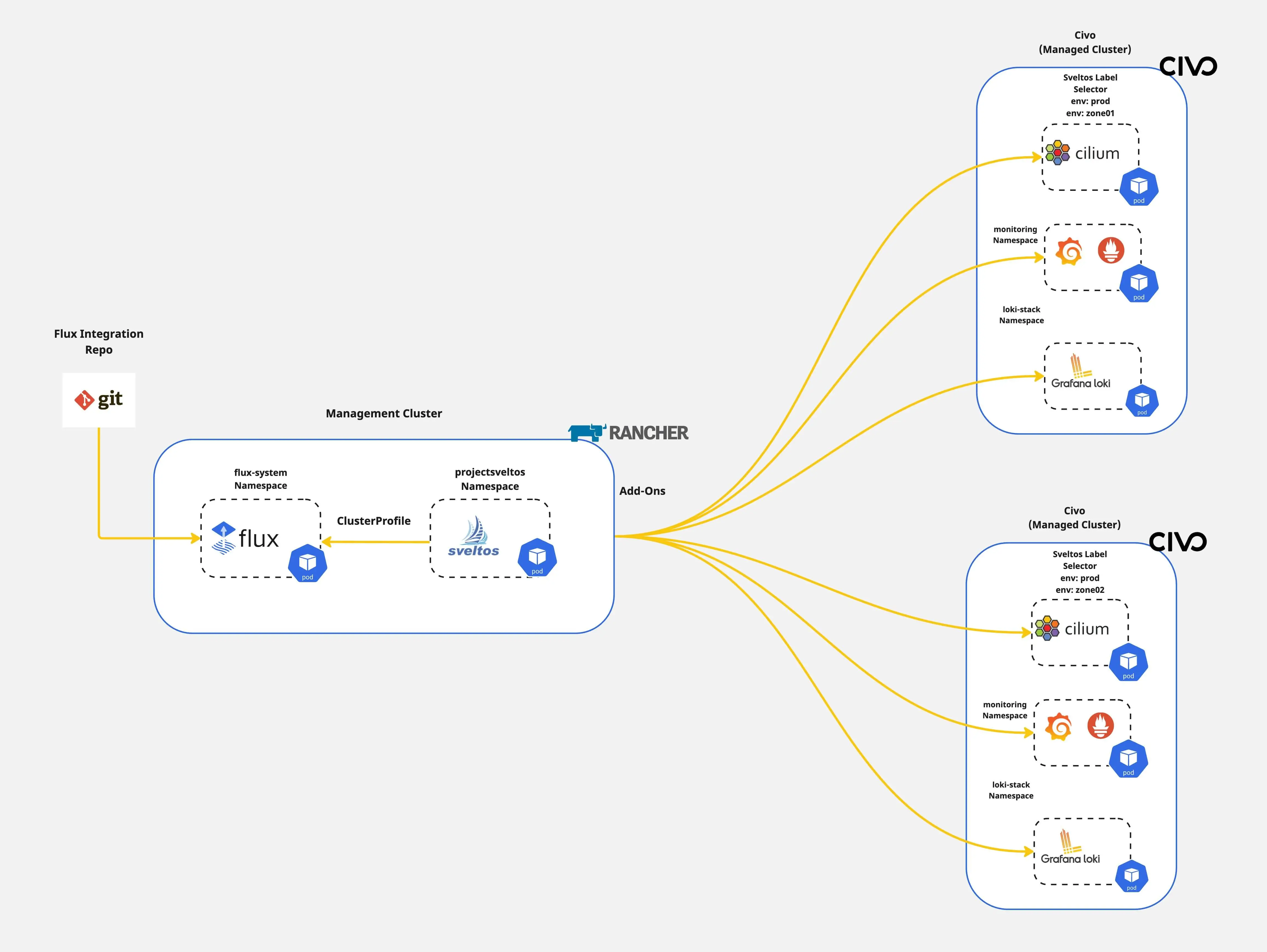

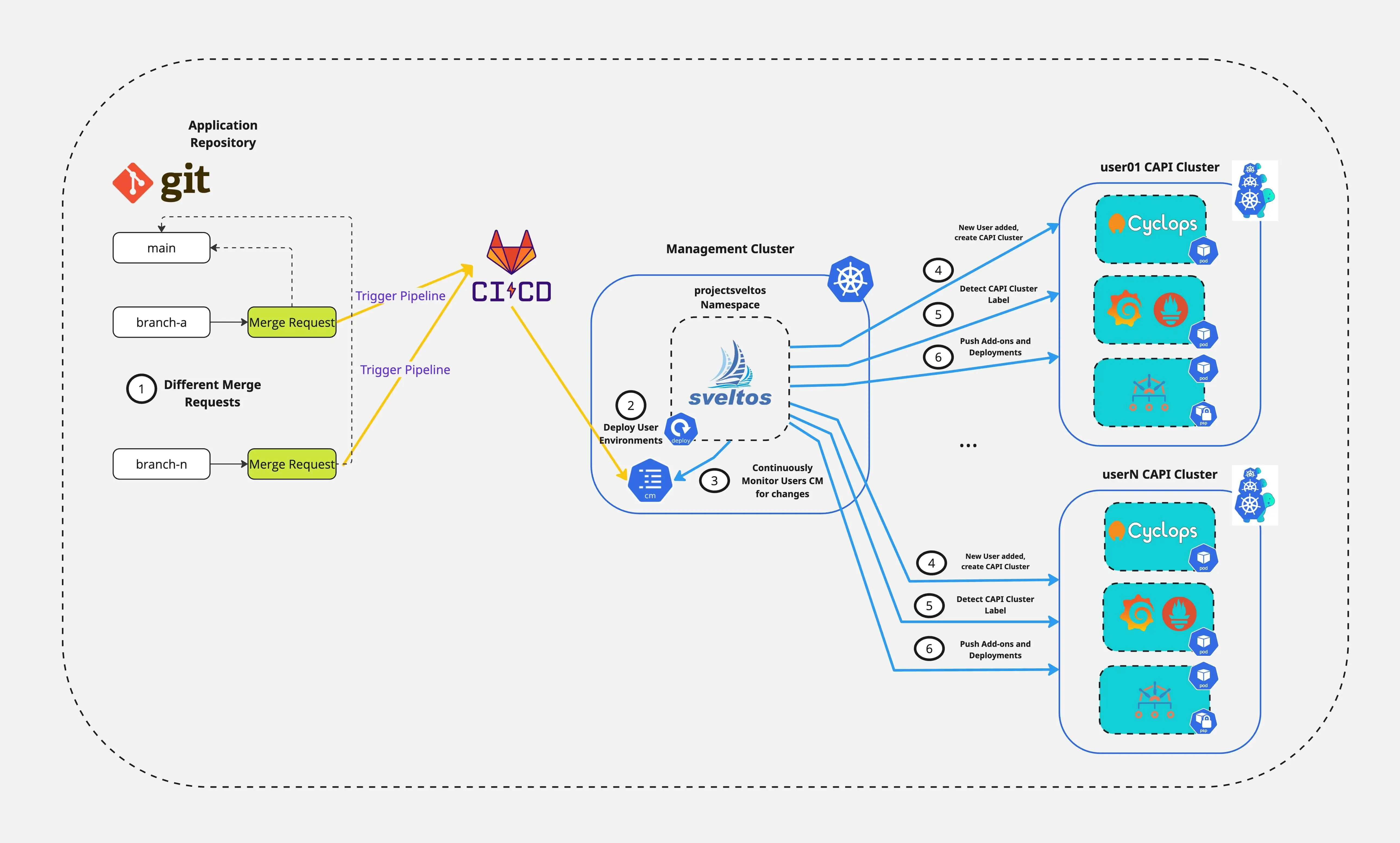

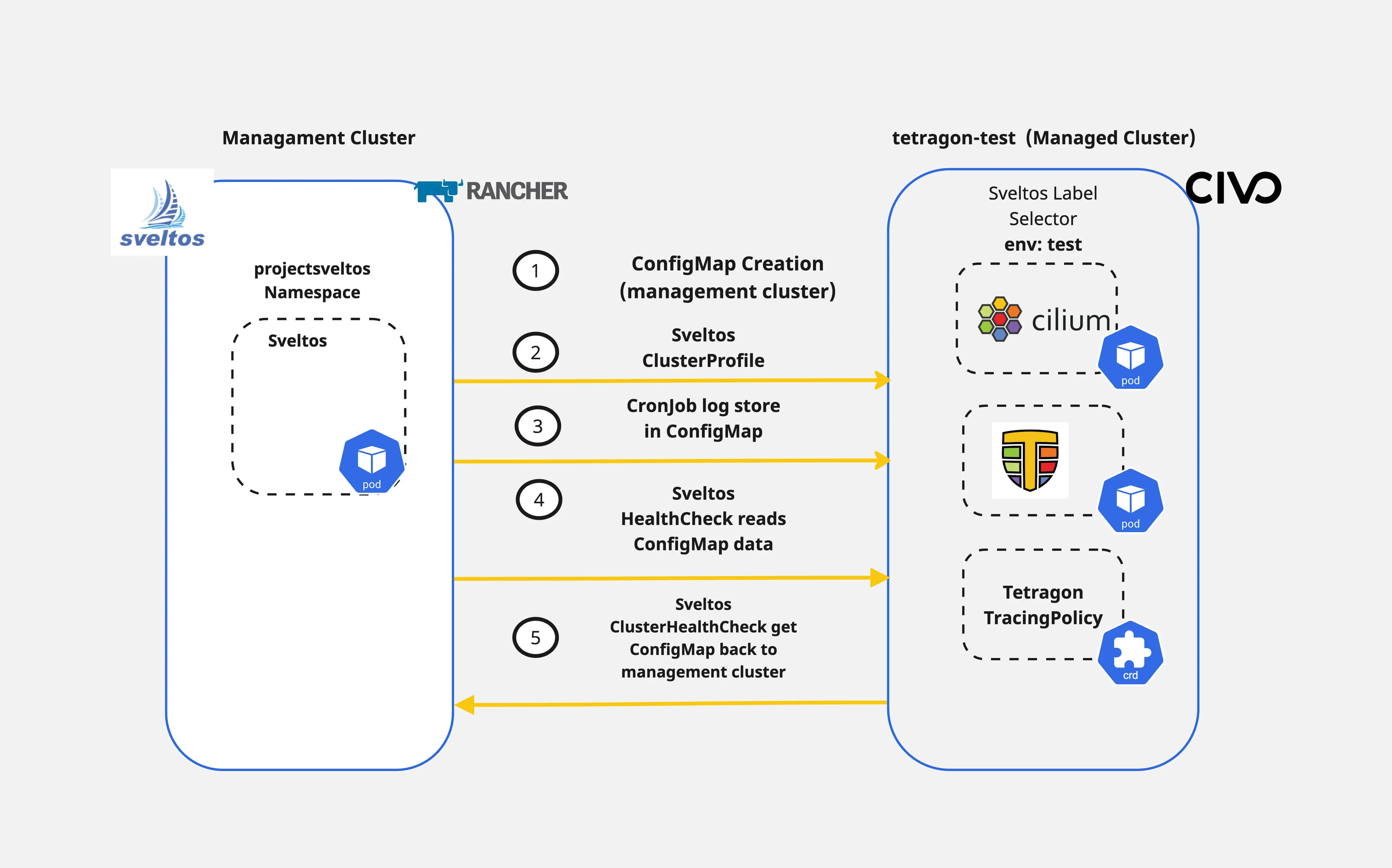

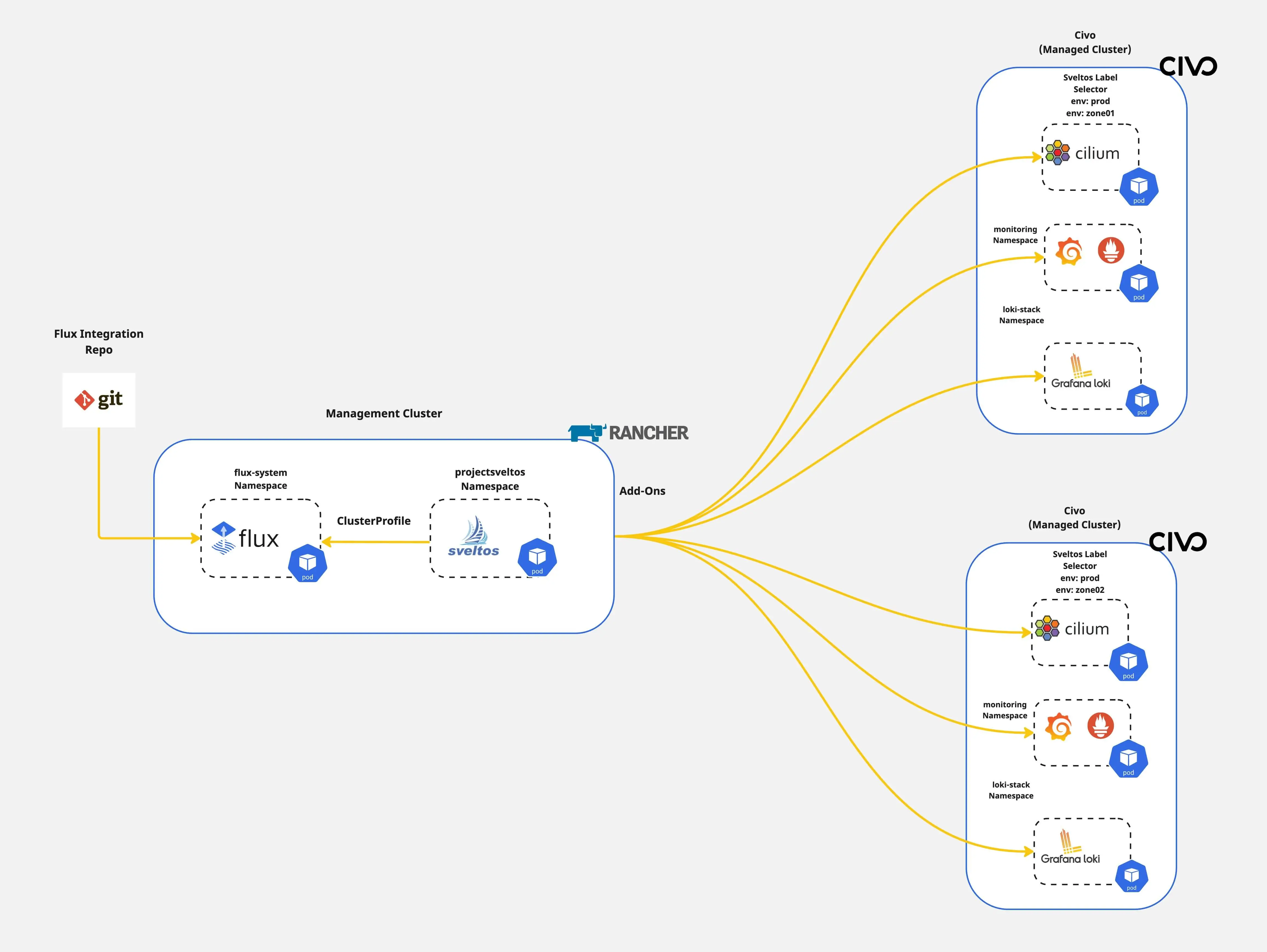

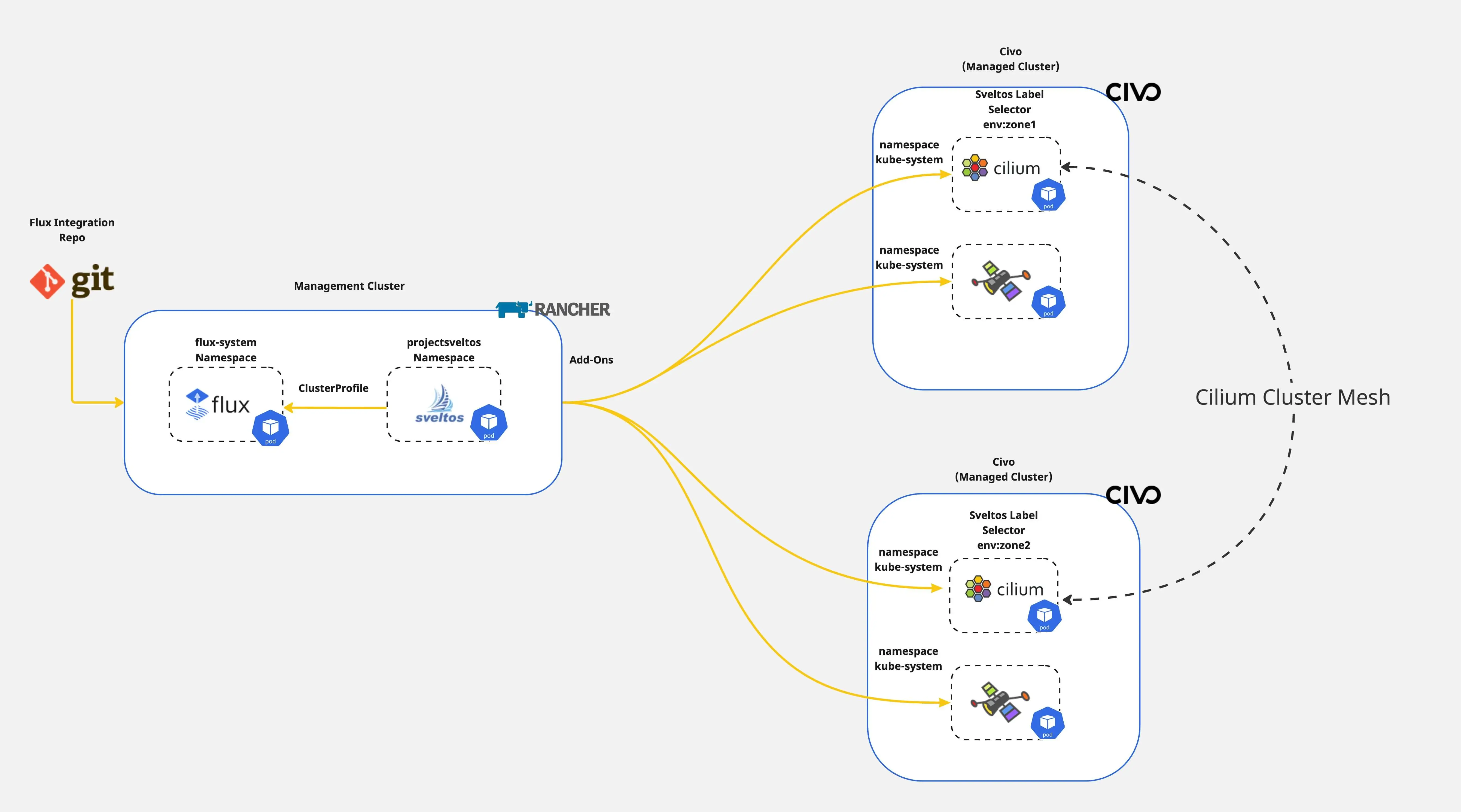

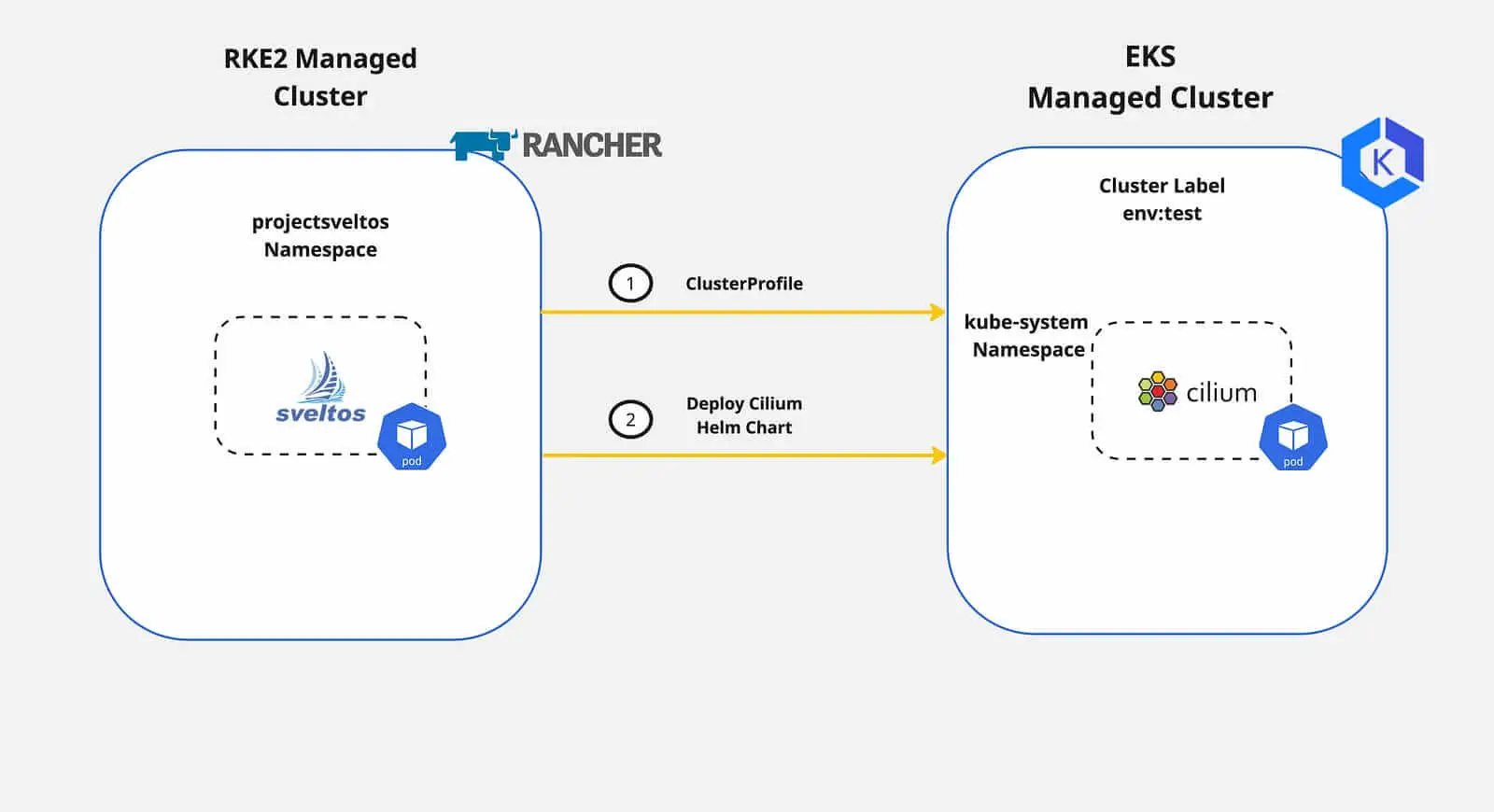

In previous posts, we outlined how Sveltos allows platform and tenant administrators to streamline the deployment of Kubernetes applications and add-ons to a fleet of clusters. In today's blog post, we take this a step further and demonstrate how easy it is to update a subset of resources that are targeted by multiple configurations. By multiple configurations, we mean multiple Sveltos ClusterProfile or Profile resources, both of which are defined as Custom Resource Definitions (CRDs). The demonstration focuses on day-2 operations: we show a way to update and/or remove resources without affecting production operations.

This functionality is called tiers. Sveltos tiers provide a way to manage deployment priority when the same resources are targeted by multiple configurations. They fit into existing ClusterProfile/Profile definitions, set the deployment order, and make it easy to override behaviour.

Today, we will cover the case of updating the Cilium CNI in a subset of clusters, those carrying the label tier:zone2, without affecting the monitoring capabilities defined in the same ClusterProfile/Profile.
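
To make the scenario concrete, here is a minimal sketch of what a tier-aware ClusterProfile for the zone2 clusters could look like. It is an illustration rather than the exact manifest used in this post: the resource name `cilium-zone2`, the tier value `50`, and the chart version are assumptions, and the sketch assumes the `v1beta1` API, where `tier` defaults to 100 and a lower value takes priority.

```yaml
# Illustrative sketch only: name, tier value and chart version are assumptions.
apiVersion: config.projectsveltos.io/v1beta1
kind: ClusterProfile
metadata:
  name: cilium-zone2            # hypothetical name
spec:
  # Assumed semantics: a lower tier value wins over the default of 100
  # when several profiles manage the same resource.
  tier: 50
  clusterSelector:
    matchLabels:
      tier: zone2               # only clusters labelled tier:zone2 are matched
  helmCharts:
  - repositoryURL: https://helm.cilium.io/
    repositoryName: cilium
    chartName: cilium/cilium
    chartVersion: 1.15.6        # placeholder version, not taken from this post
    releaseName: cilium
    releaseNamespace: kube-system
    helmChartAction: Install
```

Because this profile only declares the Cilium chart, anything else deployed by the original ClusterProfile/Profile, such as the monitoring stack, would be left as it is.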